Tomer Ullman is an assistant professor in the Department of Psychology at Harvard University, where he heads the Computation, Cognition, and Development Lab. Ullman studies intuitive theories and common sense, with a particular interest in how people think about everyday objects (intuitive physics) and other people (intuitive psychology).

A post by Tomer Ullman

Imagine the following in your mind’s eye, as vividly as you can:

A person walks into a room, and knocks a ball off a table.

Hold the image in your mind for a moment.

Now consider, for the image you conjured before you: Did you imagine the color of the ball? How about the person’s hair, or clothes, or perceived gender? Did you imagine the position of the person relative to the ball? Can you trace through the air the trajectory that the ball took?

If you’re like most people, your answer to some of these questions was ‘yes’ and to others ‘no’. Although you could easily fill in details as needed, you did not bother thinking about some of these properties when creating the original scene.

And that’s kind of weird.

Non-commitment has been noted (under different names) in both philosophy and cognitive science. In philosophy, the discussion began with perception: for example, how can you know a hen is speckled without knowing how many speckles it has (Ayer, 1940)? Similar questions were then asked of scenes before our mind’s eye: One can imagine a striped tiger without knowing how many stripes it has, or a purple cow without knowing its shade of purple (Shorter, 1952; Block, 1983; Dennett, 1986, 1993; see also more recent discussions in Nanay, 2015, 2016, and Kind, 2017).

Speckled hens, striped tigers, and purple cows.

The phenomenon of non-commitment also played a bit part in the Mental Imagery Debate, where proponents of the ‘mental images are more like propositions’ view used it to argue that mental images cannot have the same format as real images (Pylyshyn, 1978, 2002). In response, supporters of the ‘mental images are more like pictures’ view pointed out that one can also be unsure of properties of images in direct perception, which brings us back to where the philosophical discussion started: True, one can be unsure of how many stripes a tiger before our mind’s eye has, but one can also be unsure of how many stripes a real tiger has (Kosslyn et al., 2006).

Non-commitment was never center stage in the mental imagery debate, and even when it was discussed, the discussion was largely unmoored from data. It also did not distinguish between different kinds of properties, though most of the properties discussed could indeed be fuzzy in perception (the number of dots on a dotted ceiling, the exact shade of purple).

In a recent paper with Eric Bigelow and senior co-author John McCoy (Bigelow, McCoy*, & Ullman*, 2023), we empirically studied non-commitment in mental imagery, focusing on everyday scenes and on properties that should be obvious when one directly perceives an image. Across 5 studies (N > 1,800) we asked people to imagine scenes such as “a person walks into a room, and knocks a ball off a table”, or “a person walks down a supermarket aisle and puts a piece of fruit in their bag”. Much like in the opening example, we then asked people whether various properties (the color of the ball, the kind of fruit, etc.) were part of the scene they had imagined.

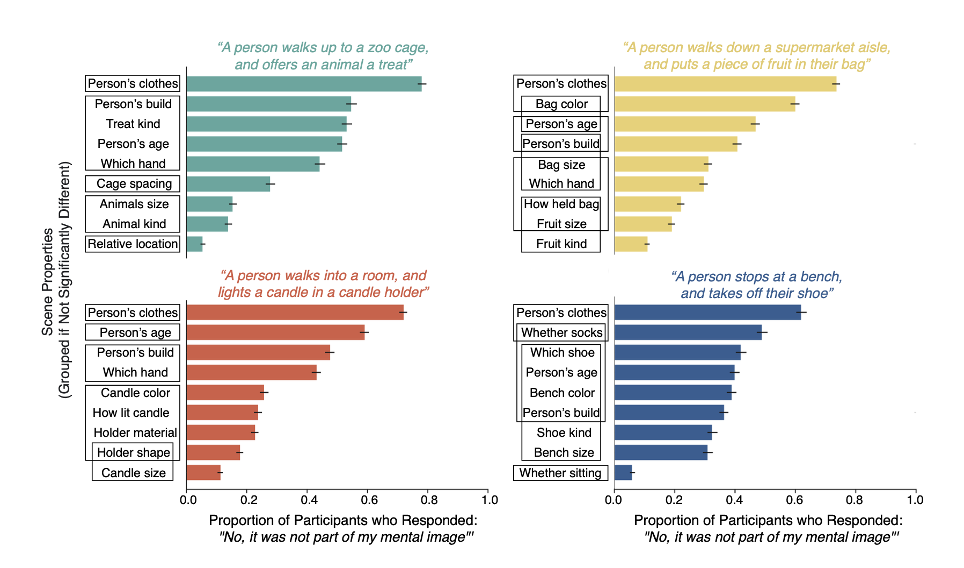

For every property we asked about, a significant number of people responded that it was not part of their mental image. Most people responded this way for 2–4 of the 9 properties asked about. So, non-commitment seems to be commonplace in mental imagery, and it applies to basic properties such as the color of a ball or which hand the person is holding a candle in. Interestingly, non-commitment was not spread uniformly: it wasn’t that people had some fixed probability of including any given property in the scene. Some properties were much more likely to be committed to than others. For example, nearly everyone committed to the location of a person relative to an animal, or to the size of a fruit, but very few people committed to a person’s clothes, age, perceived gender, or build.

Results of Study 2: people imagined one of four scenes, and reported whether various properties were part of their mental image. Properties are grouped together if the proportions of participants answering ‘No’ are not significantly different.

To check whether people were simply using the response “No, it was not part of my mental image” to indicate fuzziness or forgetfulness, we ran the experiments again with other options, including “I don’t know”, “I don’t remember”, and “Other”. Hardly anyone used these options (~4%), and the pattern found in Studies 1 & 2 replicated. Also, while we recognize that vividness is a problematic measure (Kind, 2017), people seemed to treat vividness and non-commitment differently: several experiments together showed that vividness is distinct from amount of detail. Even people who reported very high vividness did not commit to basic properties.

In our last study, we examined people’s reports when they were not given the explicit option of reporting non-commitment. That is, instead of having one of the options say “No, this was not part of my mental image”, we gave them the ability to provide an open response. People could in principle use the open response to say they did not bother thinking about certain properties. But that’s not what happened. Instead, for every property, nearly 100% of people provided detailed, often rich answers. For example, the first 4 studies showed that about 80% of people say that a person’s clothes were not part of their mental image, when given the explicit option to respond in this way. But, when simply asked for a free-form response, 99% of people provided answers such as “tight-fitted black shirt with a deep, circular neckline and long sleeves. They also wore skinny, light blue jeans, ripped at the ends of the jean cuffs. Their shoes were a white converse with a red streak at the bottom edge of the shoe.”

What to make of the gulf between the rich details people provide when given open-ended questions, and the consistent lack of commitment in the other studies? It is in principle possible that people create a full, rich, detailed image before their mind’s eye, and when asked to report it in an open-ended way simply do so accurately. While we cannot rule this option out (yet), we think a more parsimonious explanation is that people create sparse images and fill in the details upon request. In other words, we think people are confabulating, much as they confabulate when they give post-hoc, incorrect reasons for their decisions without noticing they’re doing so, or when they report false memories as true ones (Hirstein, 2009; Johnson and Raye, 1998; Nisbett and Wilson, 1977).

We think of our studies as only the starting point of a more thorough empirical and theoretical investigation of non-commitment in imagery. To name just one possible direction: all our studies relied on self-reports, and it would be cool to apply other recent measures for studying imagery to this topic (e.g. Morales and Firestone, 2023). Also, our data do not completely rule out the possibility that people did create rich mental images, and then failed to encode various properties of the scene, due to the same issues of attention and memory that plague perception. I personally find this option hard to believe, but you could test it empirically. For example, you could create different versions of real images that correspond to the scenes we asked about. Show such images to people for a brief period of time, and then ask them to report on the properties we considered. Depending on how brief or desaturated the image is, you would certainly get people to say that they didn’t notice some properties. But I would bet three nickels that there would be little to no correlation between the properties people didn’t notice in perception and the properties they didn’t commit to in imagery.

More broadly, what do our results have to say about mental imagery, or the imagery debate? One could see the findings as a feather in the cap for the propositional, anti-depictive view. For my part, I think they point to a different option. The following goes beyond the current data, and doesn’t necessarily reflect the views of the co-authors, but here goes: I think mental imagery proceeds along two different computational streams, perceptual and physical, much like the separation that exists in many current real-time simulators such as those used in video games (Ullman et al., 2017). If you were an engineer designing such a game, and your boss barged into your office and demanded you create a scene of “a person knocking a ball off a table”, you would not begin by individually coloring in pixels on a screen, or by creating a sketch. You would create allocentric object assets, whose properties can be left unspecified, and place the objects in space relative to one another. Such a representation is not just a spatial map: the objects have physical properties like size, orientation, and trajectory. On the basis of this representation one could separately “render” a visual image (a pixel-based representation on which further computation could be done), but the rendering step isn’t necessary. The physical-spatial representation can be used to answer many of the questions we associate with visual imagery, without rendering. And it can fill in details on demand. I think it’s no coincidence that non-commitment patterned the way it did in our studies, with many people committed to the relative locations of objects or their sizes, and far fewer committed to colors and textures. Such a proposal is in line with other findings of approximations in mental simulation (e.g. Bass et al., 2021; Li et al., 2023). It will take more theoretical and empirical work to firmly establish this proposal, but I’m pretty committed to it.
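The game-engine analogy can be made a little more concrete with a toy sketch. The Python below is a hypothetical illustration only, not the representation used in our paper or in Ullman et al. (2017): the names `ObjectAsset`, `Scene`, `query`, and `render`, the pools of default values, and the free-fall calculation are all assumptions made for the example. Objects carry positions and sizes, other properties can stay unset (`None`), spatial and physical questions are answered straight from the object list, and an uncommitted property only gets a value when someone asks for it or when the optional rendering step needs it.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical pools of values, used only when an uncommitted property is queried.
DEFAULTS = {
    "color": ["red", "blue", "green", "yellow"],
    "clothes": ["t-shirt and jeans", "sweater", "suit"],
}


@dataclass
class ObjectAsset:
    """An allocentric object: position and size are set, other properties may be None."""
    name: str
    position: Tuple[float, float, float]  # (x, y, z) in a shared world frame, meters
    size: float                           # rough extent, meters
    properties: Dict[str, Optional[str]] = field(default_factory=dict)

    def is_committed(self, prop: str) -> bool:
        return self.properties.get(prop) is not None

    def query(self, prop: str, rng: random.Random) -> str:
        # Fill in an uncommitted property on demand, then keep the value for later queries.
        if not self.is_committed(prop):
            self.properties[prop] = rng.choice(DEFAULTS[prop])
        return self.properties[prop]


@dataclass
class Scene:
    objects: Dict[str, ObjectAsset] = field(default_factory=dict)

    def add(self, obj: ObjectAsset) -> None:
        self.objects[obj.name] = obj

    def relative_offset(self, a: str, b: str) -> Tuple[float, ...]:
        # Where b is relative to a, answered from positions alone (no rendering).
        pa, pb = self.objects[a].position, self.objects[b].position
        return tuple(round(q - p, 3) for p, q in zip(pa, pb))

    def time_to_floor(self, name: str, g: float = 9.81) -> float:
        # Free fall from the object's height z, ignoring air resistance and initial velocity.
        z = self.objects[name].position[2]
        return math.sqrt(2 * z / g)

    def render(self, rng: random.Random) -> Dict[str, str]:
        # Optional stand-in for rendering: an image needs every object's color,
        # so any uncommitted color gets filled in here.
        return {name: obj.query("color", rng) for name, obj in self.objects.items()}


# "A person walks into a room, and knocks a ball off a table."
scene = Scene()
scene.add(ObjectAsset("table", (0.0, 0.0, 0.0), 1.2))
scene.add(ObjectAsset("ball", (0.1, 0.0, 0.75), 0.1))    # color left unspecified
scene.add(ObjectAsset("person", (-1.0, 0.0, 0.0), 1.7))  # clothes left unspecified

print(scene.objects["ball"].is_committed("color"))  # False
print(scene.relative_offset("person", "ball"))      # (1.1, 0.0, 0.75)
print(round(scene.time_to_floor("ball"), 2))        # 0.39
print(scene.objects["ball"].query("color", random.Random(0)))  # a color, chosen only now
print(scene.objects["ball"].is_committed("color"))  # True
```

In this toy version, asking where the person stands relative to the ball, or how long the ball takes to hit the floor, never touches color. Asking about color forces a value to be chosen after the fact, which is one way to picture the fill-in-on-demand account.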

References

Ayer, A. J. (1940). The foundations of empirical knowledge.

Bass, I., Smith, K. A., Bonawitz, E., & Ullman, T. D. (2021). Partial mental simulation explains fallacies in physical reasoning. Cognitive Neuropsychology, 38(7-8), 413-424.

Bigelow, E. J., McCoy*, J. P., & Ullman*, T. D. (2023). Non-commitment in mental imagery. Cognition, 238, 105498.

Block, N. (1983). The photographic fallacy in the debate about mental imagery. Noûs, 651-661.

Dennett, D. C. (1986). Content and consciousness.

Dennett, D. C. (1993). Consciousness explained. Penguin UK.

Hirstein, W. (Ed.). (2009). Confabulation: Views from neuroscience, psychiatry, psychology and philosophy. Oxford University Press, USA.

Johnson, M. K., & Raye, C. L. (1998). False memories and confabulation. Trends in Cognitive Sciences, 2(4), 137-145.

Kind, A. (2017). Imaginative vividness. Journal of the American Philosophical Association, 3(1), 32-50.

Kosslyn, S. M., Thompson, W. L., & Ganis, G. (2006). The case for mental imagery. Oxford University Press.

Li, Y., Wang, Y., Boger, T., Smith, K. A., Gershman, S. J., & Ullman, T. D. (2023). An approximate representation of objects underlies physical reasoning. Journal of Experimental Psychology: General.

Morales, J., & Firestone, C. (2023). Philosophy of Perception in the Psychologist’s Laboratory. Current Directions in Psychological Science, 09637214231158345.

Nanay, B. (2015). Perceptual content and the content of mental imagery. Philosophical Studies, 172, 1723-1736.

Nanay, B. (2016). Imagination and perception. In The Routledge Handbook of Philosophy of Imagination (pp. 124-134).

Nisbett, R. E., & Wilson, T. D. (1977). Telling more than we can know: Verbal reports on mental processes. Psychological Review, 84(3), 231.

Pylyshyn, Z. W. (1973). What the mind’s eye tells the mind’s brain. Psychological Bulletin, 80, 1-24.

Pylyshyn, Z. W. (1978). Imagery and artificial intelligence. Minnesota Studies in the Philosophy of Science, 9.

Pylyshyn, Z. W. (2002). Mental imagery: In search of a theory. Behavioral and Brain Sciences, 25(2), 157-182.

Shorter, J. M. (1970). Imagination. Ryle, 137-155.

Ullman, T. D., Spelke, E., Battaglia, P., & Tenenbaum, J. B. (2017). Mind games: Game engines as an architecture for intuitive physics. Trends in Cognitive Sciences, 21(9), 649-665.